Cisco UCS B200 M3: "Invalid Adaptor IOcard"

October 19, 2012 in Systems3 minutes

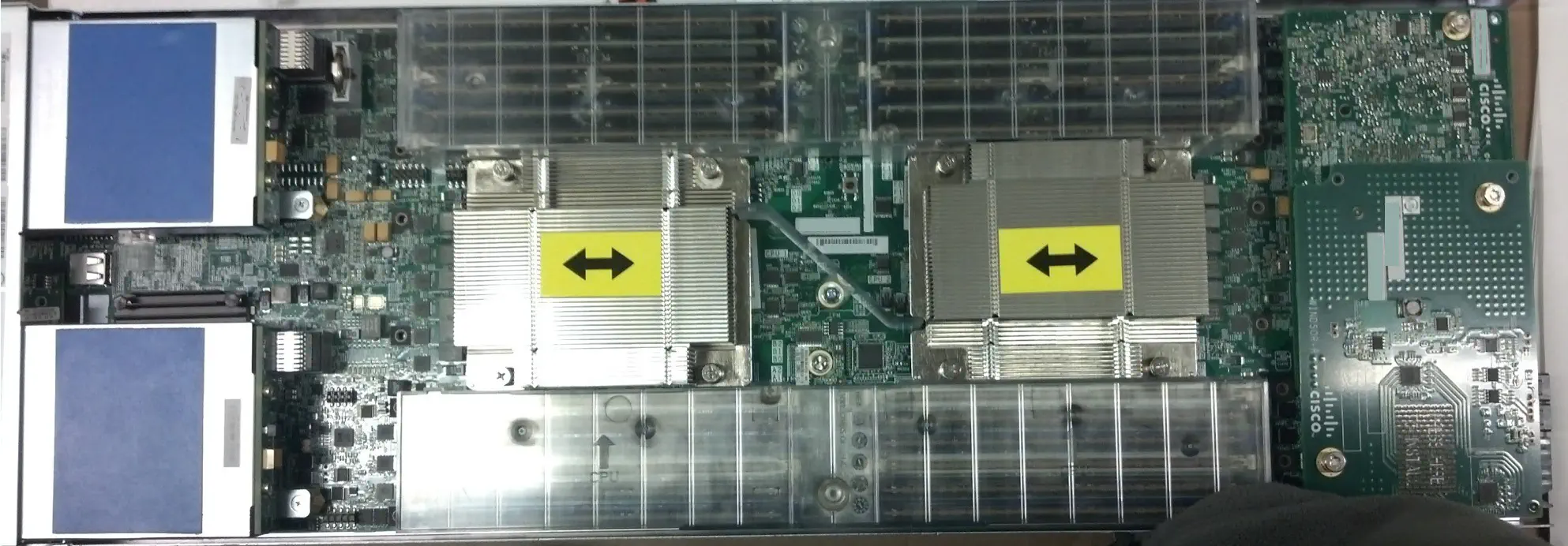

I received two brand spanking new B200 M3 blade servers for a new project. These bad boys are packing 393GB of RAM and two Intel Xeon E5-2680 2.7GHz 8-core processors each.

I wanted to get these installed as soon as possible, so I could make sure the firmware was up to current (they came with 2.0(3c), which is what I’m running) and apply service profiles to them.

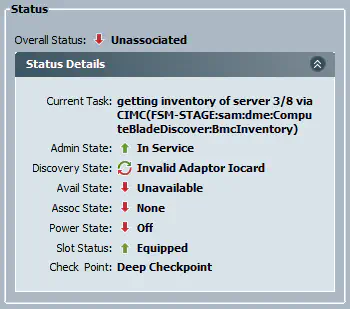

At the end of the initial deep hardware discovery, I received a strange error in UCSM - “Invalid Adaptor Iocard”:

This surprised me, and made me instantly concerned that there was a hardware issue on these brand new blades. However, the other M3 was also having the same error.

There’s a bit of different hardware in the back of a typical M3 blade. Most of the blades in my infrastructure are B200 M2 single-width blades with the M81KR converged network adapter in the back.

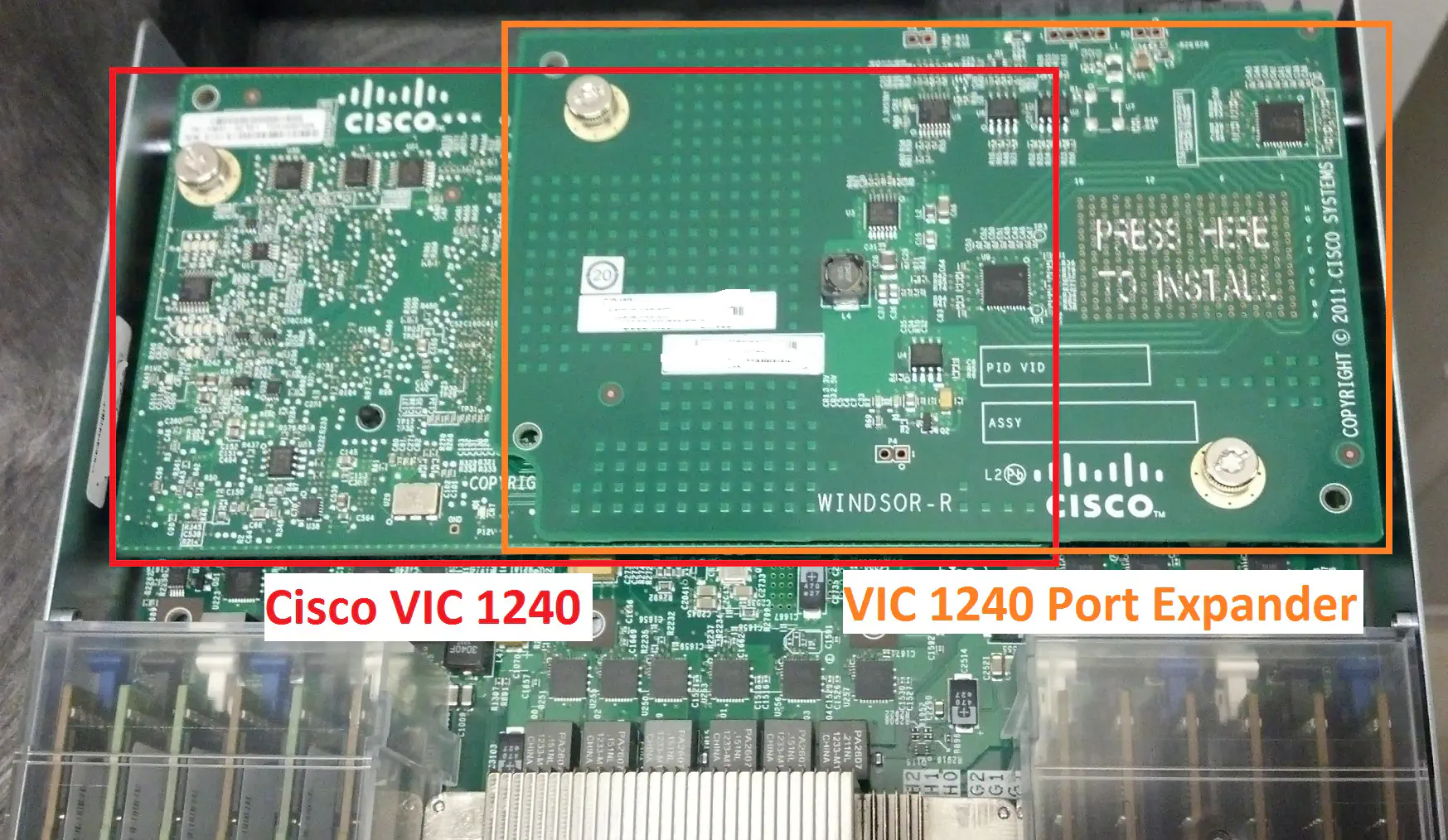

Instead, the B200 M3 servers I’m working with have the VIC 1240 installed:

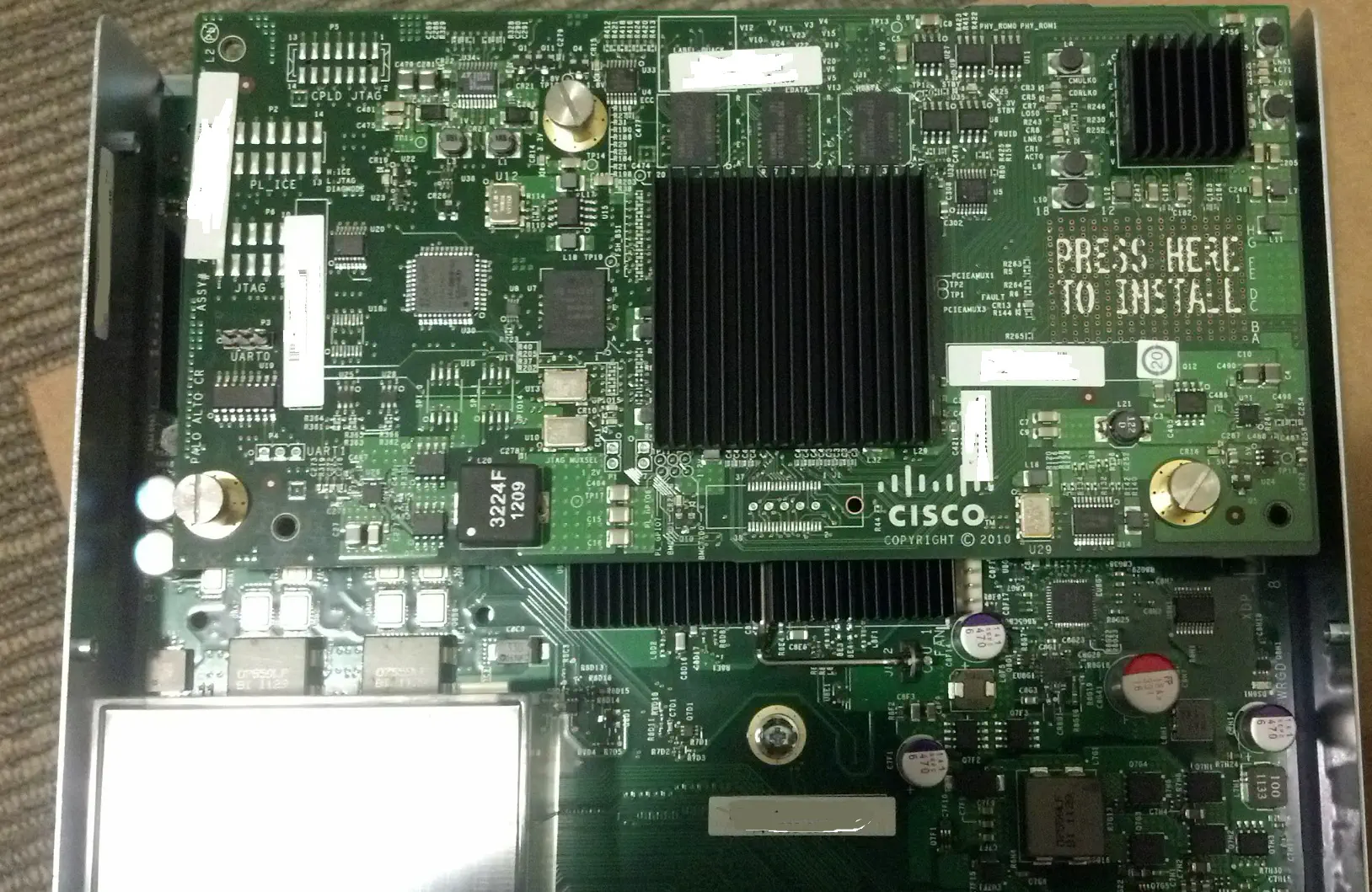

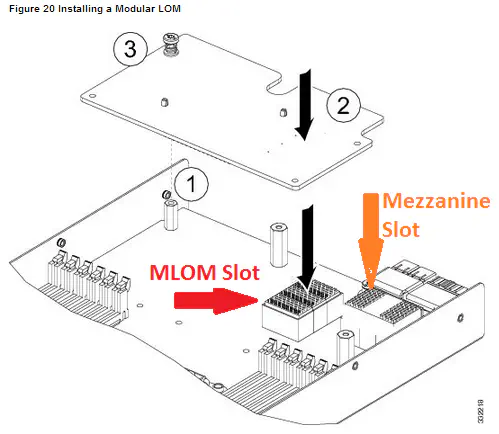

You’ll notice that there is an additional card on top, sitting to the right. This is a fairly new concept for some, as the B200 M3 has an additional Modular LOM slot for compatible cards to provide at least 2 x 10Gbit of bandwidth (this includes both regular ethernet and FCoE) to each chassis IOM (fabric extender). The VIC 1240 sits directly in this slot, and is (right now) the only card able to do so.

From “Cisco UCS B200 M3 Blade Server Installation and Service Note”

The mezzanine slot is where traditional UCS adaptors like the M81KR would sit in. Since the VIC 1240 sits in the dedicated mLOM slot, the mezzanine slot can then be used for other things.

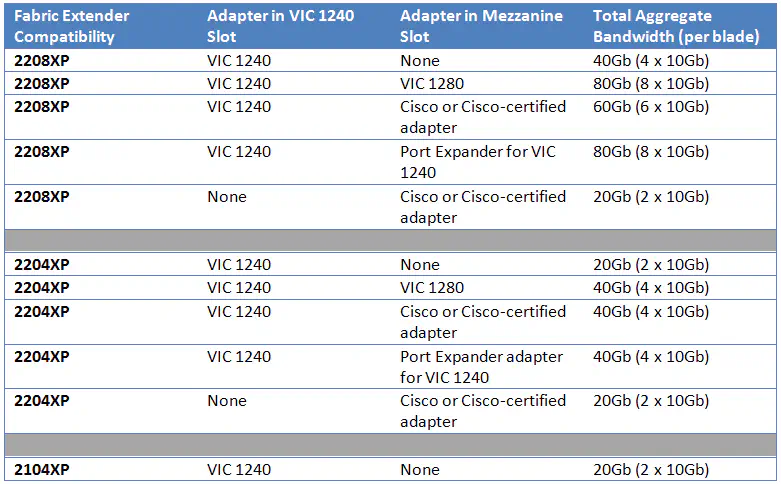

There are quite a few options, but the card shown in the picture above is a Cisco VIC 1240 port expander, which can be used to light up an additional two paths, one to each IOM. This means that the two can be used to provide a total of 40Gbit/s to the blade. See the table below for all current “mix-n-match” capabilities.

From Cisco UCS B200 M3 Spec Sheet

If you read the spec sheet (click link at bottom of table) you’ll read that the 2104XP is not compatible with anything plugged in to the mezzanine slot.

Unfortunately, this was the cause of my problem - the expanders enabled an additional 20Gbit bandwidth to each blade but in order to take advantage of it, I had to use newer chassis IOMs like the 2204XP or 2208XP. Nevertheless, in this environment, 20Gbit/s to the blade is sufficient, and this obstacle has been identified.

The fix was to simply remove the expander card, shown in the last image a few paragraphs up. I set these aside until I could figure out a way to upgrade the fabric extenders in my environment. After I did this, the servers were instantly recognized.

If you have 2104XP fabric extenders in your environment, note this incompatibility, because Cisco’s newer blades will likely be driving more towards this hardware architecture. The 2104XP is by no means old, so I am not sure I feel happy about it, but at least 20Gb is sufficient (for now).